
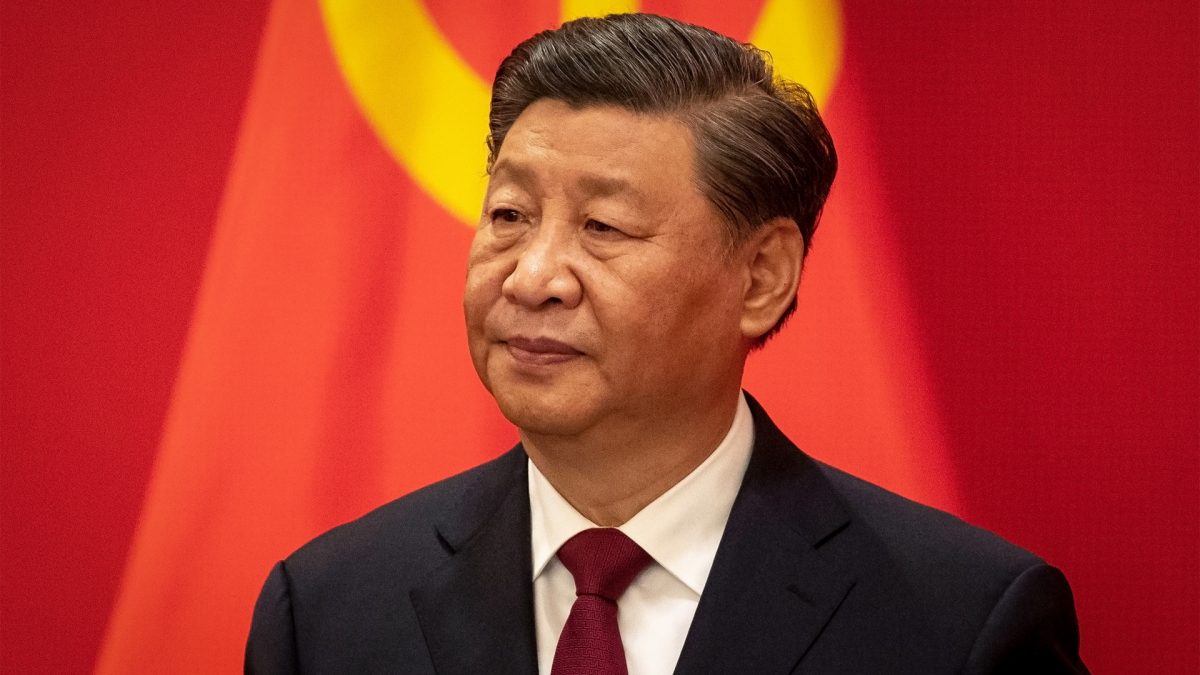

Chinese authorities are pushing for further advancements in safety protocols for LLMs. Image credit: Reuters

Chinese government officials are currently subjecting large language models (LLMs) developed by prominent AI companies such as ByteDance, Alibaba, Moonshot, and 01.AI to a stringent testing regime. The effort is led by the Cyberspace Administration of China (CAC), the agency tasked with ensuring that these advanced AI systems align with what the government defines as “core socialist values.”

The testing process, as outlined by multiple sources familiar with the matter, involves comprehensive evaluations of how these LLMs respond to a wide range of questions. Many of these queries delve into sensitive political topics, including references to China’s leadership under President Xi Jinping.

The review is not limited to the LLMs’ responses; it also scrutinizes their training data and overall safety protocols. This regulatory scrutiny underscores China’s position at the forefront of global efforts to tightly regulate AI technologies and the content they generate.

According to insights from an employee at a Hangzhou-based AI company, the CAC’s audit process is rigorous and time-consuming. Companies are required to host CAC officials who conduct detailed examinations of their AI models.

Initial failures in the review process often necessitate adjustments based on feedback and consultations with industry peers, adding significant time and effort to the compliance process. This stringent regulatory environment has compelled Chinese AI firms to rapidly develop and implement sophisticated censorship mechanisms to ensure compliance with government standards.

One of the key challenges highlighted by engineers and industry insiders is the need to balance the sophistication of these LLMs with strict adherence to government-mandated censorship. LLMs are trained on vast amounts of data, often including English-language content, which further complicates the task of aligning their outputs with Chinese regulatory requirements.

The Chinese government’s operational guidelines, issued in February, mandate that AI companies collect and filter thousands of sensitive keywords and questions that may violate what the government defines as “core socialist values.” These guidelines are regularly updated, reflecting ongoing adjustments to censorship standards in response to evolving political sensitivities.

The practical implications of these regulatory requirements are evident in the user experience of AI chatbots in China. Chatbots often deflect questions related to historically sensitive events such as the Tiananmen Square massacre or humorous comparisons involving President Xi Jinping. Instead of providing direct answers, these chatbots suggest alternative queries or indicate limitations in their ability to respond effectively, demonstrating the extent of censorship enforced by these AI systems.
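The keyword screening and deflection behavior described above can be illustrated with a minimal sketch. It assumes the simplest possible design: a prompt is checked against a keyword list by case-insensitive substring matching, and any match returns a canned reply suggesting another query instead of reaching the model. The keyword entries, the `DEFLECTION` text, and the function names `normalize`, `matched_keywords`, and `screen_prompt` are all hypothetical; the actual lists and filtering methods used by Chinese AI companies are not described in detail here.

```python
from __future__ import annotations

# Hypothetical placeholder terms: the real keyword lists required by the
# guidelines are not reproduced here, so these only stand in for them.
BLOCKED_KEYWORDS: set[str] = {"restricted topic a", "restricted topic b"}

# Hypothetical canned reply that deflects and suggests asking something else.
DEFLECTION = "I can't discuss that. Perhaps try asking about something else?"


def normalize(text: str) -> str:
    """Case-fold and collapse whitespace so trivial variations still match."""
    return " ".join(text.casefold().split())


def matched_keywords(prompt: str, keywords: set[str]) -> list[str]:
    """Return the sorted list of keywords that appear as substrings of the prompt."""
    norm = normalize(prompt)
    return sorted(k for k in keywords if normalize(k) in norm)


def screen_prompt(prompt: str, keywords: set[str] = BLOCKED_KEYWORDS) -> str | None:
    """Return the deflection if any keyword matches; None means pass the prompt on."""
    if matched_keywords(prompt, keywords):
        return DEFLECTION
    return None


if __name__ == "__main__":
    print(screen_prompt("Tell me about Restricted   Topic A"))  # prints the deflection
    print(screen_prompt("What is the weather today?"))  # prints None
```

Keeping the list as plain data means it can be swapped out without code changes, which fits guidelines that are regularly updated. Plain substring matching is crude, though: it misses paraphrases and can block harmless prompts that happen to contain a listed phrase.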

Interestingly, while the CAC imposes stringent testing requirements before deployment, there are indications that oversight becomes less rigorous once LLMs are live. This has led some developers to build preemptive measures into their models, such as outright bans on discussing certain topics related to Chinese political leadership, to avoid potential regulatory issues.

For less overtly sensitive inquiries, developers have added further layers of filtering and real-time response modification. This approach uses classifier models, similar to spam filters, to categorize LLM outputs and replace potentially problematic responses with safer alternatives, thereby minimizing compliance risks.
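To show what a spam-filter-style output classifier looks like, here is a minimal sketch using multinomial naive Bayes with add-one smoothing, the textbook spam-filtering technique. The article does not say which classifiers the companies actually use, so naive Bayes is a stand-in chosen for illustration. The training examples, the `"flagged"`/`"ok"` labels, `SAFE_REPLACEMENT`, and the `moderate` function are all hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter

# Hypothetical toy training data; labels "flagged" and "ok" are illustrative only.
TRAIN = [
    ("details about restricted topic alpha", "flagged"),
    ("more on restricted topic beta", "flagged"),
    ("here is a recipe for noodle soup", "ok"),
    ("the weather is sunny today", "ok"),
]

# Hypothetical safer alternative substituted for flagged model outputs.
SAFE_REPLACEMENT = "Sorry, I can't help with that. Could we try a different question?"


def tokenize(text: str) -> list[str]:
    return text.lower().split()


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter[str]] = {}
        self.doc_counts: Counter[str] = Counter()
        self.vocab: set[str] = set()

    def fit(self, docs: list[tuple[str, str]]) -> NaiveBayes:
        for text, label in docs:
            tokens = tokenize(text)
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.vocab.update(tokens)
        return self

    def log_score(self, text: str, label: str) -> float:
        """log P(label) plus the sum of log P(token | label) over known tokens."""
        counts = self.word_counts[label]
        denom = sum(counts.values()) + len(self.vocab)
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for tok in tokenize(text):
            if tok in self.vocab:  # skip tokens never seen during training
                score += math.log((counts[tok] + 1) / denom)
        return score

    def predict(self, text: str) -> str:
        return max(self.doc_counts, key=lambda label: self.log_score(text, label))


def moderate(output: str, clf: NaiveBayes) -> str:
    """Pass the model's output through unless the classifier flags it."""
    return SAFE_REPLACEMENT if clf.predict(output) == "flagged" else output


if __name__ == "__main__":
    clf = NaiveBayes().fit(TRAIN)
    print(moderate("some details on restricted topic alpha", clf))  # replaced
    print(moderate("the weather is sunny today", clf))  # passed through unchanged
```

Because the check runs on the generated text rather than the prompt, it can catch problematic answers to innocuous-looking questions, which a prompt-side keyword list would miss. With only four training examples this is a toy; any real deployment would need far more labeled data and a tuned decision threshold rather than a bare comparison of scores.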

ByteDance, notably, has been recognized for making significant strides in aligning its AI models with Beijing’s narrative preferences. A research assessment conducted at Fudan University ranked ByteDance’s LLMs highly for safety compliance, showcasing the company’s efforts to navigate the complex regulatory landscape effectively.

Chinese authorities are pushing for further advancements in safety protocols for LLMs. Fang Binxing, a key figure in China’s internet regulation known for developing the “great firewall,” emphasized the need for robust real-time online monitoring systems to complement existing safety filings. This underscores China’s commitment to developing a distinctive technological framework that meets both regulatory requirements and strategic national interests.
