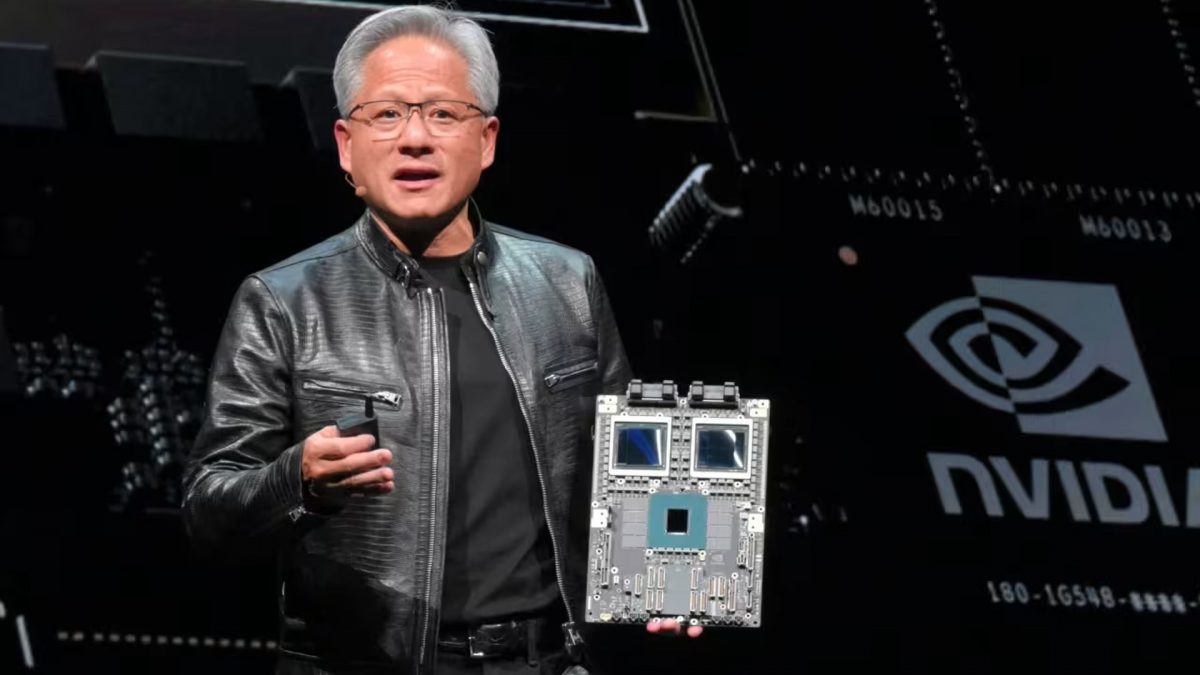

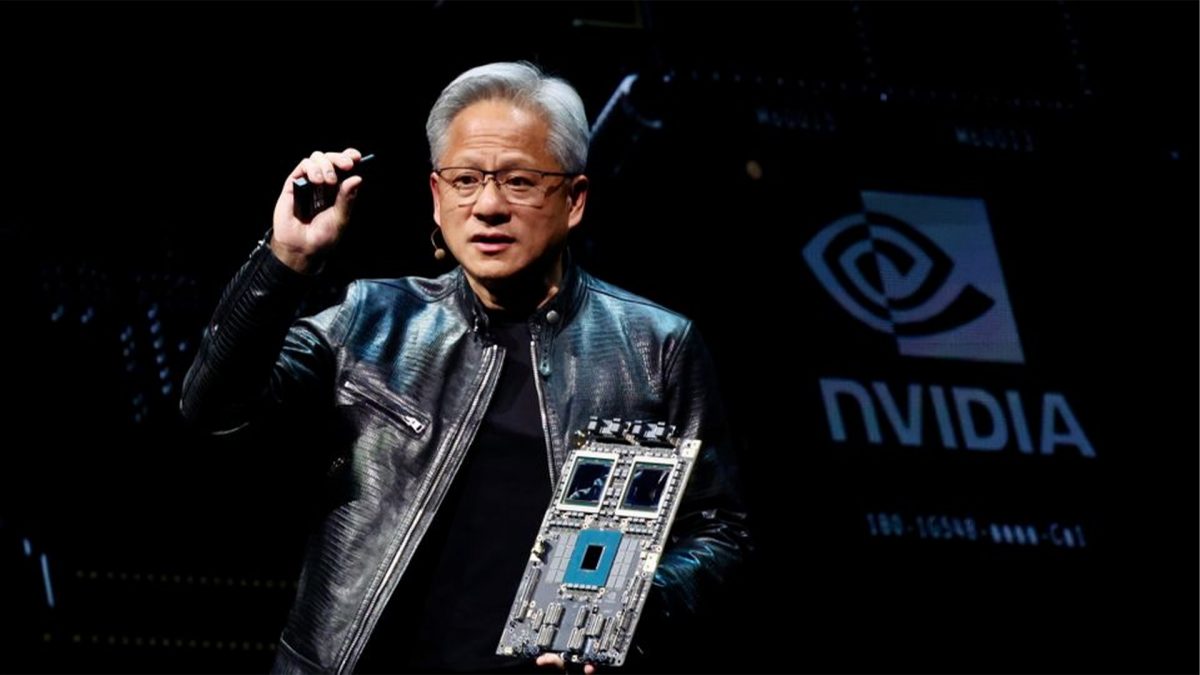

Training Musk’s Grok 2 model required about 20,000 NVIDIA H100 GPUs, and future iterations, such as the Grok 3 model, will require approximately 100,000 NVIDIA H100 chips.


Musk has indicated that the Grok 3 model and future versions will require even more resources, an estimated 100,000 NVIDIA H100 chips. Image Credit: AP

Dell Technologies and Super Micro have announced the creation of “AI factories” to house NVIDIA GPUs, which will be instrumental in training xAI’s Grok models. This ambitious project is part of a larger effort spearheaded by Elon Musk to advance AI capabilities through substantial investments in computational resources.

Michael Dell, CEO and chairman of Dell Technologies, revealed on the social media platform X that the company is developing the Dell AI Factory. This initiative will use NVIDIA GPUs to power Grok, the AI model developed by Elon Musk’s AI company, xAI.

Musk has made significant investments in AI infrastructure, acquiring tens of thousands of GPUs in 2023 alone. Specifically, training the Grok 2 model required about 20,000 NVIDIA H100 GPUs, and future iterations, such as the Grok 3 model, will necessitate approximately 100,000 NVIDIA H100 chips.

Musk has also shared plans for a new supercomputer set to be operational by fall 2025.

Dell is responsible for assembling half of the racks for xAI’s supercomputer, as Musk confirmed on X. When asked about the other partner in this venture, Musk named “SMC,” referring to Super Micro, a San Jose, California-based server manufacturer known for its collaborations with chip firms such as NVIDIA and for its liquid-cooling technology. Super Micro has confirmed its partnership with xAI to Reuters.

There has also been speculation about a collaboration with Oracle to build a large AI system. According to a report by The Information in May, Musk told investors that xAI plans to build a supercomputer to support the next version of its AI chatbot, Grok.

This supercomputer, powered by an interconnected array of NVIDIA H100 GPUs, would be at least four times larger than the largest existing GPU clusters.

In April 2024, xAI introduced Grok-1.5V, its first-generation multimodal model, which combines strong text capabilities with the ability to process a wide range of visual information, including documents, diagrams, charts, screenshots, and photographs. Training such advanced AI models requires tens of thousands of power-hungry chips, which are currently in short supply.

Earlier this year, Musk highlighted the significant computational demands of training the Grok 2 model, which utilized about 20,000 NVIDIA H100 GPUs. He noted that the subsequent Grok 3 model and future versions would require even more resources, with an estimated need of 100,000 NVIDIA H100 chips. This enormous demand underscores the scale of Musk’s AI ambitions and the substantial infrastructure required to support them.

Meanwhile, other tech giants are also investing heavily in AI infrastructure. Meta’s AI chief Yann LeCun revealed that Meta has secured $30 billion worth of NVIDIA GPUs to train its own AI models, a sign of the broader industry trend toward massive investment in the hardware behind the next generation of AI technologies.

The partnership between Dell, Super Micro, and xAI represents a significant step forward in AI development. The creation of AI factories equipped with state-of-the-art NVIDIA GPUs will provide the computational power necessary to train sophisticated AI models like Grok, potentially transforming the landscape of artificial intelligence and its applications. As these projects progress, the tech industry will be closely watching the advancements and innovations that emerge from these AI powerhouses.
