To verify the caller’s identity, the executive asked a specific question about Chief Executive Officer Benedetto Vigna, the man the scammers claimed to be. It was a question only Vigna could answer.

Earlier this month, a Ferrari NV executive received a series of unexpected messages that appeared to come from Chief Executive Officer Benedetto Vigna. The messages hinted at a significant acquisition and requested the executive’s assistance. Although they looked legitimate, they raised suspicion: they didn’t come from Vigna’s usual business number, and the profile picture, though it depicted Vigna, was slightly different.

“Hey, did you hear about the big acquisition we’re planning? I could need your help,” one message read. Another message urged the executive to be ready to sign a Non-Disclosure Agreement that a lawyer would send soon, mentioning that Italy’s market regulator and the Milan stock exchange had already been informed.

According to sources familiar with the incident, what followed was an attempt to use deepfakes to carry out a live phone conversation and infiltrate Ferrari. The executive, who received the call, quickly sensed something was amiss, preventing any potential damage. The voice impersonating Vigna was convincing, mimicking his southern Italian accent perfectly.

The impersonator explained that he was calling from a different number due to the confidential nature of the discussion, which involved a deal with potential complications related to China and required an unspecified currency-hedge transaction. Despite the convincing act, the executive grew more suspicious, noticing subtle mechanical intonations in the voice.

To verify the caller’s identity, the executive asked a specific question: What was the title of the book Vigna had recently recommended? The call ended abruptly when the impersonator couldn’t answer. This incident prompted Ferrari to launch an internal investigation, though company representatives declined to comment on the matter.

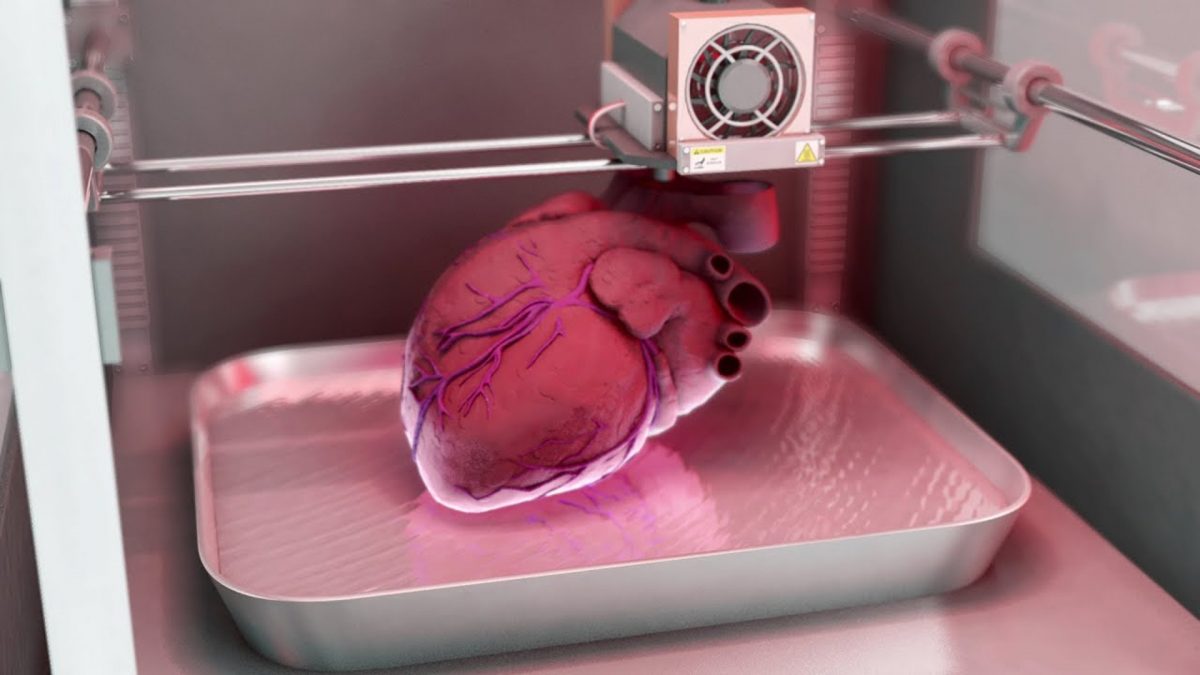

This event is part of a growing trend where criminals use deepfake technology to impersonate high-profile executives. In a similar incident, Mark Read, CEO of advertising giant WPP Plc, was targeted by a deepfake scam on a Teams call.

Rachel Tobac, CEO of cybersecurity training company SocialProof Security, noted that there has been an increase in attempts to use AI for voice cloning this year. While these generative AI tools can produce convincing deepfake images, videos, and recordings, they haven’t yet caused the widespread deception many fear.

However, some companies have fallen victim to such scams. Earlier this year, an unnamed multinational company lost HK$200 million ($26 million) after scammers used deepfake technology to impersonate its chief financial officer and other executives in a video call, convincing the victim to transfer money.

Companies like CyberArk are already training their executives to recognize bot scams. Stefano Zanero, a cybersecurity professor at Italy’s Politecnico di Milano, warned that AI-based deepfake tools are expected to become increasingly accurate and sophisticated, posing a growing threat to businesses worldwide. As these tools advance, the need for heightened awareness and training in detecting such scams becomes ever more crucial.

