
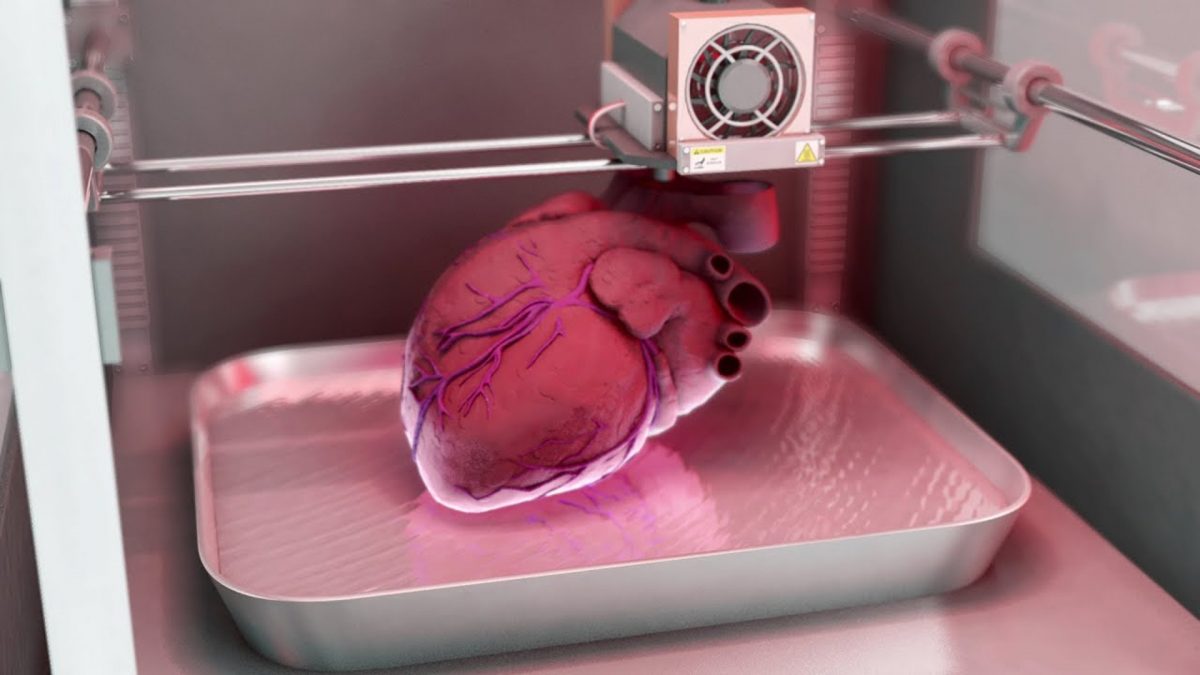

One of the coolest features of V2A is its ability to generate an unlimited number of soundtracks for any video. Users can tweak the audio output with 'positive prompts' and 'negative prompts' to get the sound just right. Image Credit: Pexels

Creating videos from text prompts is becoming simpler thanks to models like Sora, Dream Machine, Veo, and Kling. However, many of these tools have a major drawback: they can’t generate sound, leaving us with silent videos. Google DeepMind is now tackling this issue with its latest innovation: a new AI model that can create soundtracks and dialogue for videos.

In a recent blog post, Google DeepMind introduced V2A (Video-to-Audio), an AI model that combines a video’s visual cues with text prompts to generate rich audio. The technology aims to transform the way we create and experience AI-generated videos by adding dramatic music, realistic sound effects, and matching dialogue.

V2A is designed to work seamlessly with Veo, Google’s text-to-video model that was showcased at Google I/O 2024. This combination allows users to enhance their videos not just visually but also audibly. V2A can add sound to anything from modern videos created with Veo to silent films and old archival footage, bringing them to life in a whole new way.

One of the coolest features of V2A is its ability to generate an unlimited number of soundtracks for any video. Users can tweak the audio output with ‘positive prompts’, which steer it toward desired sounds, and ‘negative prompts’, which steer it away from unwanted ones, to get the sound just right. Plus, every piece of generated audio is watermarked with SynthID technology so that it can be identified as AI-generated.

V2A uses a diffusion model trained on a mix of sounds, dialogue transcripts, and videos. While the model is powerful, it wasn’t trained on a massive number of videos, so the generated audio can sometimes sound slightly off. Because of this, and to prevent potential misuse, Google isn’t planning to release V2A to the public anytime soon.

The introduction of V2A by Google DeepMind is a significant step forward in video creation technology. By adding sound and dialogue, V2A fills a crucial gap, making videos more immersive and engaging. Although it’s still in the works and not yet available for public use, V2A shows incredible promise for the future of video production.

