Google acknowledges that content removal isn’t foolproof. Therefore, it has updated its ranking system to lower the visibility of explicit fake content and associated websites in search results.

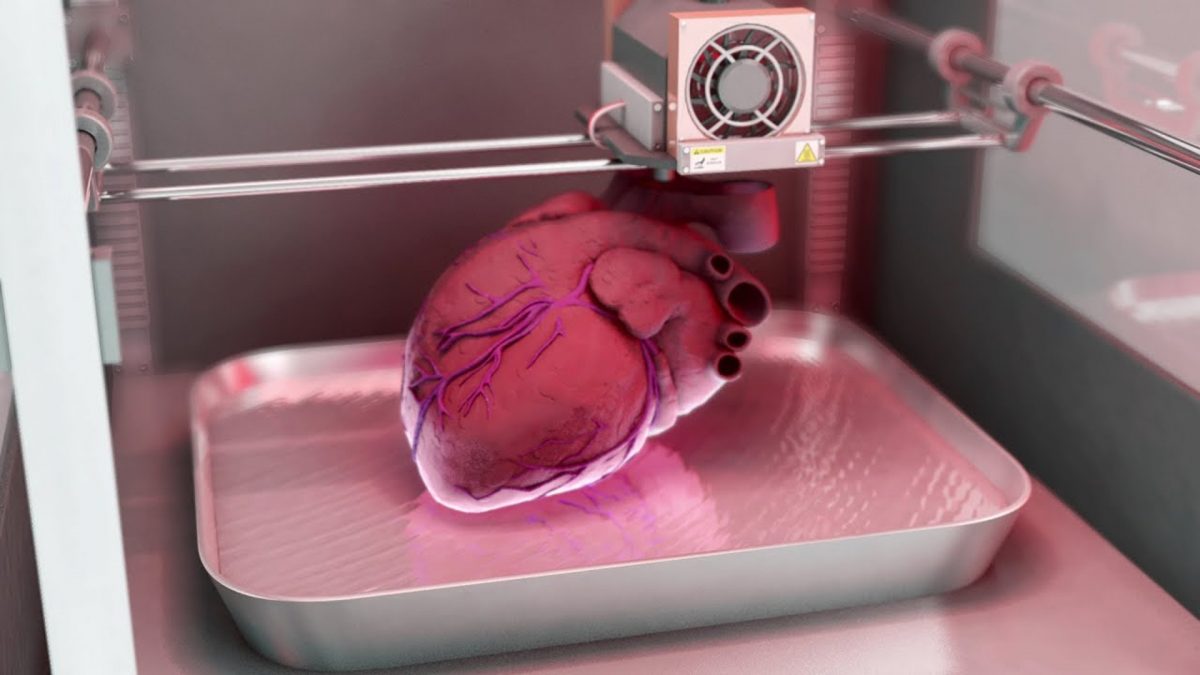

Google's system will automatically identify and remove duplicates of the image and related content, addressing the widespread nature of such images across the internet. Image Credit: Pexels

Google is stepping up its efforts to remove explicit AI-generated deepfake images and videos from its search results. The tech giant aims to ensure that non-consensual deepfakes are kept out of its search engine. Because of the offensive nature of these images, Google is committed to pushing them far from the first page of search results where complete removal isn’t feasible.

Google has a history of allowing individuals to request the removal of explicit deepfakes. However, the rise in generative AI image creation has necessitated more robust measures. The request system has been streamlined for easier and quicker submission and processing.

Once a request is validated, Google’s algorithms will also filter out similar explicit results linked to the individual. This means victims don’t need to manually search for every variation that might display the content.

Google’s system will automatically identify and remove duplicates of the image and related content, addressing the widespread nature of such images across the internet.

On YouTube, Google has enhanced its measures to combat unauthorised deepfakes. Previously, YouTube merely labelled AI-created or misleading content. Now, individuals depicted in such videos can submit a privacy complaint, prompting YouTube to give the video’s owner a few days to remove it before reviewing the complaint.

Despite these efforts, Google acknowledges that content removal isn’t foolproof. Therefore, it has updated its ranking system to lower the visibility of explicit fake content and associated websites in search results.

For instance, when someone searches for news about a celebrity’s deepfakes and their testimony before lawmakers, Google will prioritise news stories and related articles over the deepfakes themselves.

Platforms like Facebook and Instagram have updated their policies to address AI-generated explicit content. Meta’s Oversight Board recently recommended changes to cover such content and improve the appeals process.

Legislators are also responding. New York State’s legislature has passed a bill targeting AI-generated non-consensual pornography under its “revenge porn” laws. At the national level, the U.S. Senate introduced the NO FAKES Act of 2024 to address explicit content and non-consensual use of deepfake visuals and voices. Similarly, Australia is working on a bill to criminalise the creation and distribution of non-consensual explicit deepfakes.

Google reports some success in reducing explicit deepfakes, claiming that early tests with these changes have cut the appearance of such images by over 70 per cent. However, Google recognises that more work is needed.

It’s important to remember that just because something doesn’t appear in search results doesn’t mean it doesn’t exist. The nature of the internet means that once something is online, it’s virtually impossible to remove it entirely. This underlines the importance of proactive and ongoing efforts to manage and mitigate the spread of harmful content.
