We ask industry veteran Rahul Mahajan, Chief Technical Officer of Nagarro, one of the world’s fastest-growing end-to-end solutions providers in the IT space, where India’s AI dreams stand and what challenges businesses are facing with AI.


Many service-based businesses rely on AI to attract new customers, but the sustainability of this model is questionable given the escalating expenses. Image credit: Pixabay

India’s IT sector has undergone a remarkable transformation, and Rahul Mahajan, Nagarro’s CTO, has had a front-row seat to these changes. He also has an impressive résumé: he holds more than 15 granted patents worldwide and specializes in emerging technology areas such as digital platform clouds, AI, machine learning, quantum computing, hyperscaler clouds, and IoT.

Naturally, then, he is well placed to answer how India’s computing needs have evolved over the years, particularly in the realm of AI, and where we are heading.

“India proudly stands on a robust IT and digital foundation, characterized by innovations such as Aadhaar, UPI, DigiLocker, extensive mobile penetration, and widespread access to micro-banking. These advancements have laid the groundwork for the rapid evolution of AI needs in the country,” he says.

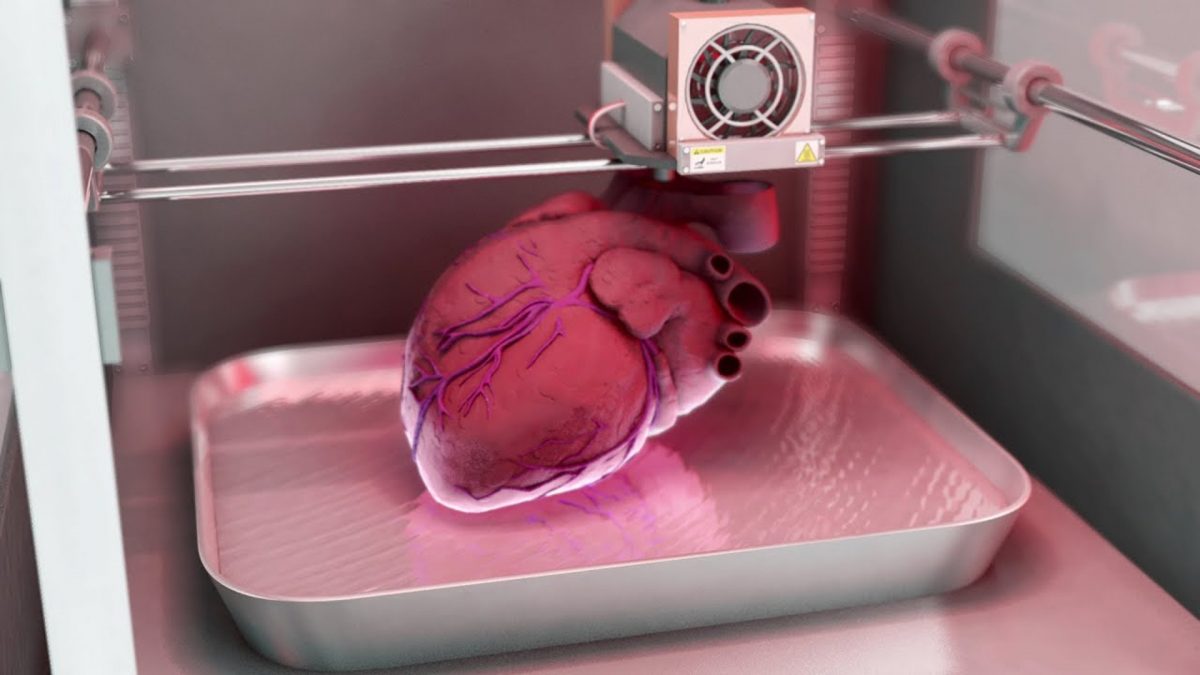

In healthcare, AI is driving significant changes by enhancing the reach and efficiency of medical services. With Aadhaar integrated into health initiatives like the Ayushman Bharat Health Network, a connected healthcare system that includes health, insurance, and banking is becoming a reality.

AI on devices and cloud platforms can facilitate early disease detection, providing a lifeline to millions by identifying health issues at nascent stages. “We are on the brink of achieving AI-led early disease detection through integrated medical record systems like ABHA (Ayushman Bharat Health Account). This integration promises to revolutionize healthcare delivery, making it more efficient and responsive to the needs of the masses,” he adds.

Similarly, in education, AI is set to democratize access to high-quality learning resources. The dream of ‘education for all’ is becoming tangible, with AI providing simplified access to global educational content. “This technological leap is particularly significant for students in rural and underserved areas, who can now learn in their local languages through voice-assisted AI tools. A well-educated and skilled youth population will be instrumental in propelling India’s growth to new heights, fostering innovation and driving economic progress,” adds Mahajan.

We asked the veteran CTO where AI stands today and what challenges it faces, to understand where India’s AI dream is headed. Edited excerpts:

Firstpost: What are the top challenges of a business today when deploying AI?

Rahul Mahajan: Deploying AI in business operations presents significant challenges that must be addressed to fully leverage the technology’s potential. Some of the primary hurdles organizations face today are:

AI Governance and Responsible AI: One of the foremost challenges is the lack of investment in AI governance and responsible AI practices. Without proper oversight, AI systems can produce biased or unpredictable outcomes, leading to a lack of trust and acceptance among stakeholders. Establishing robust AI governance frameworks is essential to ensure transparency, fairness, and accountability in AI applications.

Data privacy and security: Data privacy and security infrastructure are critical yet often overlooked areas. Protecting sensitive data from breaches and unauthorized access is crucial, especially when handling vast amounts of information required for AI models. Businesses must invest in advanced security measures and comply with data protection regulations to safeguard user data and maintain trust.

Data silos: In both B2B and B2C contexts, data collection infrastructure is frequently fragmented, with production, supply chain, inventory, marketing, sales, and consumer data managed by separate teams. This siloed approach hinders effective data integration and limits the potential of AI applications.

Technological fragmentation: Different technologies used by web, mobile, and call centres contribute to data fragmentation, complicating AI deployment. Businesses need to invest in cohesive technology stacks that facilitate data flow and support AI-driven decision-making.

Scalability and ROI: AI use cases are inherently data- and computation-intensive. Implementing AI in isolated silos often results in suboptimal ROI and limited accuracy. Scaling AI applications across the organization requires a holistic approach, ensuring that all relevant data is integrated and utilized effectively.

Security and non-explainability: Security concerns, data breaches, and the non-explainability of AI predictions are significant barriers. These issues can lead to poor productivity, inefficiency, and diminished consumer trust, ultimately impacting product and service quality. Businesses must prioritize security measures and develop explainable AI models to mitigate these risks and enhance user confidence in AI systems.

Talent and skill gap: The talent required to develop and deploy AI solutions is scarce and expensive. Policymakers and educational institutions need to emphasize AI education at all levels, equipping students with the necessary technical knowledge and fostering a skilled workforce ready to tackle AI challenges.

A three-pronged approach is recommended to channel AI research effectively:

Advancing core AI research to push the boundaries of what AI can achieve.

Developing and deploying application-based research to solve practical business problems.

Establishing international research collaborations to address global challenges in healthcare, agriculture, and climate change using AI.

FP: Thanks to OpenAI, Google and Meta, every CEO on the planet believes that their AI deployment has to have some component of LLM in it. How should a CTO have that conversation, that instead of an LLM, they may need an LAM?

RM: While LLMs are powerful, they may not always be the best fit for every application. A Language Action Model (LAM), which focuses on generating actionable insights and connecting various services, can often provide a more practical and effective solution.

According to Nagarro’s 2024 digital trends, Generative AI Playbooks are set to revolutionize digital experiences. These playbooks are computationally generated, AI-agent-assisted solution recipes designed to tackle complex B2B and B2C problems. They offer a range of capabilities:

Interactivity and simulations: Generative AI Playbooks can run simulations and are interactive, allowing users to test different scenarios and outcomes.

Digital Twin integration: These playbooks are connected to digital twins, providing a real-time, dynamic representation of physical assets and processes.

LLM support and human language compatibility: They are supported by LLMs and can communicate in human language, making them accessible and easy to use.

The key to transitioning from LLMs to LAMs lies in enhancing the user’s digital experience. LAMs provide a comprehensive and actionable digital experience that LLMs alone cannot match. For instance, consider a college student seeking a wellness solution. An integrated AI playbook could offer:

A 360-degree wellness plan: Including diet, fitness, allergies, and nutrition, tailored to the student’s needs.

Extended services: Connecting the student with partners for additional services such as counselling, fitness training, and dietary consultations.

Progress tracking and adjustment: Continuously monitoring the student’s progress and making necessary adjustments to the plan.

Creating such a playbook requires orchestrating various components seamlessly while ensuring simplicity for the user. This smart orchestration is where LAMs shine, providing a cohesive and intuitive experience.

For enterprises, adopting LAMs involves implementing solutions with the right level of governance, observability, and explainability. These design principles are critical for maximizing the value of AI solutions and ensuring they meet business objectives. When discussing AI deployment with CEOs and other CXOs, it is essential to emphasize how LAMs can intelligently integrate various products, services, and ecosystem elements to maximize utility value. Highlight the disruptive potential of Digital Experience LAMs in delivering a comprehensive, action-oriented digital experience. Additionally, stress the importance of governance, observability, and explainability in implementing enterprise-ready AI solutions.

As a CTO, steering your organization towards adopting LAMs rather than solely relying on LLMs can result in more effective and actionable AI deployments. By focusing on enhancing the depth of user digital experience, smart orchestration, and enterprise-ready solutions, one can ensure that AI initiatives deliver maximum value and drive business success.

FP: The cost of training AI models is getting out of hand. Inference costs are also creeping up. At the same time, many service-based businesses are trying to acquire new customers through the promise of AI. Is this a sustainable model? If not, how do we manage high computational costs?

RM: The rapidly increasing costs of training and inference for AI models, particularly LLMs, are becoming a significant concern for many service-based businesses. They often rely on AI to attract new customers, but the sustainability of this model is questionable given the escalating expenses. Some strategies companies can apply to manage and reduce these high computational costs effectively include:

Effective deployment of knowledge graphs: By leveraging knowledge graphs, businesses can improve the efficiency of AI models. Knowledge graphs organize information in a structured way, allowing AI systems to access relevant data more quickly and accurately, thus reducing the need for extensive real-time computation. For example, in customer service applications, knowledge graphs can help AI systems quickly find answers to customer queries by accessing pre-structured data, minimizing the need for intensive computations.
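To make the knowledge-graph idea concrete, here is a minimal sketch, assuming a toy in-memory graph of (subject, relation, object) triples indexed by subject and relation. The class name, helper function, and product facts (`router-x100` and so on) are hypothetical illustrations, not Nagarro's implementation. The point is that a structured lookup answers a known question cheaply, and only unanswered queries need to fall through to a costlier model call.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy in-memory knowledge graph of (subject, relation, object) triples."""

    def __init__(self):
        # Index: subject -> relation -> set of objects, so a lookup is a
        # couple of dictionary accesses instead of a scan over all triples.
        self._index = defaultdict(lambda: defaultdict(set))

    def add(self, subject, relation, obj):
        self._index[subject][relation].add(obj)

    def query(self, subject, relation):
        """Return sorted objects linked to `subject` by `relation` (empty if none)."""
        # .get() avoids creating empty entries for unknown subjects/relations.
        return sorted(self._index.get(subject, {}).get(relation, set()))


def answer_query(kg, product, attribute):
    """Answer from pre-structured facts; return None to signal a fallback
    (hypothetically, to a more expensive model call)."""
    facts = kg.query(product, attribute)
    if facts:
        return f"{product} {attribute}: {', '.join(facts)}"
    return None


# Hypothetical customer-service facts.
kg = KnowledgeGraph()
kg.add("router-x100", "warranty_months", "24")
kg.add("router-x100", "supported_bands", "2.4GHz")
kg.add("router-x100", "supported_bands", "5GHz")
```

For example, `answer_query(kg, "router-x100", "supported_bands")` is served entirely from the graph, while a question about an unknown product returns `None` and would be routed elsewhere.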

Advanced grounding for hyper-contextualization: Advanced grounding techniques enable AI models to understand and process context more effectively. By grounding AI in specific contexts, the models can provide more accurate responses without requiring extensive computation. This reduces the overall computational load, as the models can rely on contextual understanding to deliver relevant information without processing large amounts of data in real time.

Effective vector embedding lookups: Using efficient vector embedding lookups can significantly reduce the computational requirements of AI models. Vector embeddings represent data in a compact form, allowing AI systems to perform lookups and computations more efficiently. Optimizing vector embeddings can minimize the need for real-time computations, as the models can quickly retrieve relevant information from pre-computed embeddings.
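A minimal sketch of a pre-computed embedding lookup follows, assuming embeddings have already been produced by some model (the three-dimensional vectors and document names below are made-up toy values). Vectors are normalized once when stored, so each lookup reduces to dot products (cosine similarity) rather than fresh computation over raw data. The `EmbeddingIndex` class is a hypothetical illustration.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


class EmbeddingIndex:
    """Brute-force index over pre-computed, pre-normalized embeddings."""

    def __init__(self):
        self._keys = []
        self._vectors = []  # stored normalized, so queries skip that work

    def add(self, key, vector):
        self._keys.append(key)
        self._vectors.append(normalize(vector))

    def top_k(self, query, k=1):
        """Return the keys of the k stored vectors most similar to `query`."""
        q = normalize(query)
        scores = [sum(a * b for a, b in zip(q, v)) for v in self._vectors]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        return [self._keys[i] for i in ranked[:k]]


# Hypothetical toy embeddings for support articles.
index = EmbeddingIndex()
index.add("refund policy", [0.9, 0.1, 0.0])
index.add("shipping times", [0.1, 0.9, 0.1])
index.add("account login", [0.0, 0.1, 0.9])
```

Here `index.top_k([0.8, 0.2, 0.0], k=2)` ranks "refund policy" first and "shipping times" second. This linear scan is only for illustration; production systems typically use an approximate nearest-neighbour index to keep lookups fast at scale.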

Sustainability of AI models in service-based businesses: Ensuring a sustainable AI model involves several key considerations. First, businesses must balance investment and ROI by conducting thorough cost-benefit analyses, considering the long-term benefits of AI deployment against the initial and ongoing costs. Strategic investment is crucial, focusing on areas where AI can deliver the most significant impact and cost savings. Additionally, developing scalable AI solutions that can grow with the business is essential. This means creating AI models capable of handling increasing volumes of data and users without a corresponding exponential increase in costs. Adopting modular AI architectures also allows businesses to scale specific components as needed, reducing unnecessary computational expenses.

Cloud and edge computing: Utilizing a combination of cloud and edge computing can help manage computational costs. Edge computing allows for processing data closer to its source, reducing the need for expensive cloud-based computations. Cloud services offer scalable resources, but businesses must monitor and optimize their usage to avoid excessive costs. Hybrid models can balance the benefits of both cloud and edge computing.

Efficient model training and inference: Reducing the frequency of model retraining and focusing on incremental updates can lower training costs. Employing techniques such as transfer learning and few-shot learning can also make training more efficient. Optimizing inference processes through methods like model pruning, quantization, and distillation can significantly reduce the computational load and associated costs.
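Quantization, one of the inference optimizations mentioned above, can be illustrated with a minimal sketch of symmetric post-training int8 quantization, assuming a flat list of float weights (the weight values are hypothetical). Each weight is mapped to an integer in [-127, 127] using a single scale factor, so storage drops from 4 bytes (float32) to 1 byte per weight, at the cost of a rounding error of at most half the scale.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to ±127; guard the all-zero case.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the scale."""
    return [x * scale for x in q]


# Hypothetical layer weights.
weights = [0.5, -1.27, 0.0, 0.3]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Real frameworks add refinements such as per-channel scales and calibration data, but the core trade-off is the same: fewer bits per weight means less memory and cheaper arithmetic at inference time.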

FP: There is a lot of conversation around sovereign AI. Considering how LLMs are being developed today, what challenges do you see in developing sovereign AI?

RM: In my experience, a hybrid approach to development is the first step. While the core foundational model may be a global and public instance, subsequent grounding can be sovereign, which generally provides a win-win from a customer experience perspective.

Developing a sovereign version from the ground up is feasible but will require significant effort and cost. With the maturation of open-source models, particularly from Meta and others, sovereign grounding could become equally interesting. Typically, it takes more than a couple of million dollars to train one LLM cluster, so a hybrid model may be a more realistic option.

FP: C-suite executives and business leaders, who may or may not be proficient with AI or even with tech in general, are very excited about implementing AI in their businesses. How should CIOs approach them to ensure that AI is implemented in the right direction and in the right areas?

RM: Business leaders are increasingly enthusiastic about the potential of AI, even if they may not fully understand its intricacies. A CIO’s role is crucial in channelling this excitement into strategic, impactful AI initiatives.

By focusing on building a robust data infrastructure, enhancing the digital experience, educating leaders, aligning AI with business objectives, fostering a collaborative culture, and driving effective change management, you can ensure that AI is deployed effectively and strategically. This approach will help harness the full potential of AI, driving innovation and growth while maintaining alignment with the organization’s goals.

FP: Finally, the question that’s on everyone’s mind: in what ways will AI change employment and jobs as we know them today?

RM: AI will create multiple unique and new job avenues, benefiting society in the mid to long term. Shifts are already visible, particularly in data security, data governance, invisible payments, and touchless interfaces like DigiYatra; these are just initial examples. While we may feel nervous, history shows that humans have always adapted to disruptive changes. Robotics, immersive AI, and connected IoT mark the beginning of a new and exciting era.
